Artificial Neural Networks

May 4, 2024

Artificial Neural Networks (ANNs): Learning Machines Inspired by the Brain

Artificial neural networks (ANNs) are a powerful class of machine learning algorithms loosely inspired by the structure and function of the human brain. They consist of interconnected nodes, called artificial neurons, that process information and learn from data. By enabling machines to learn complex patterns and relationships, ANNs have driven major advances in fields such as image recognition, natural language processing, and financial forecasting.

Key Concepts:

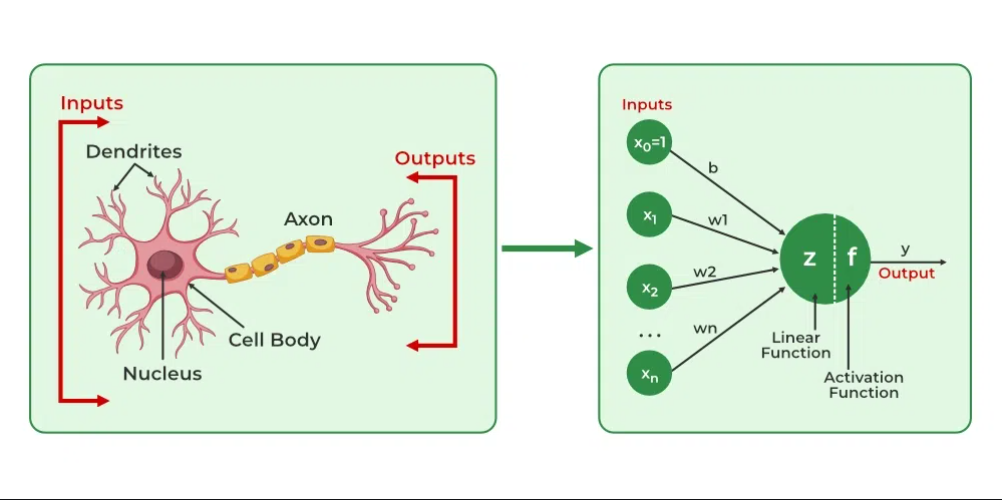

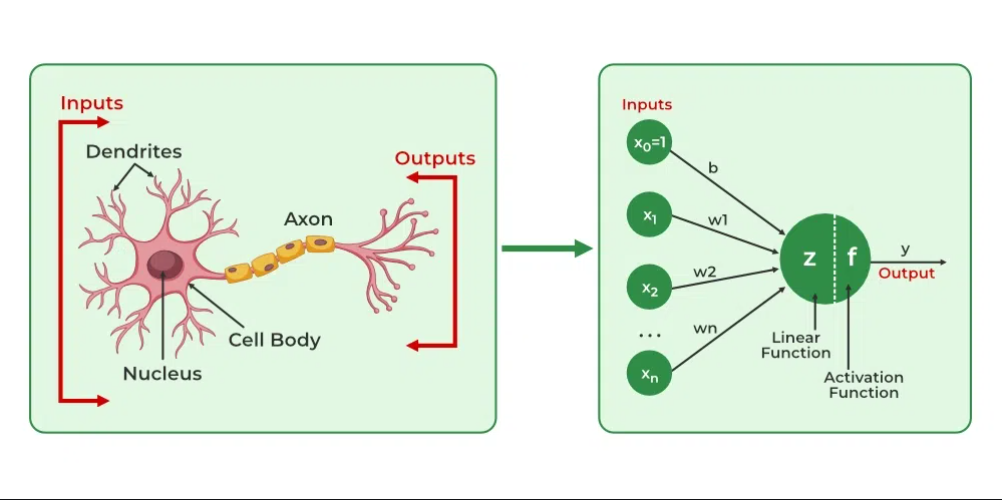

- Artificial Neurons: The fundamental unit of an ANN is the artificial neuron. Each neuron receives input from other neurons, applies a mathematical function (activation function) to transform the input, and then outputs a signal to other neurons.

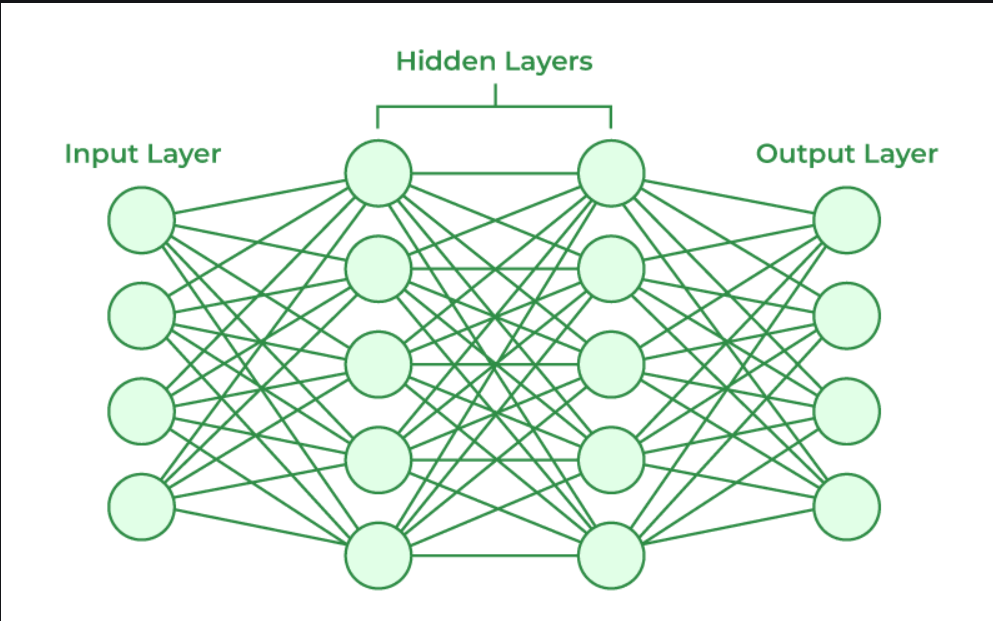

- Layers: ANNs are organized into layers: an input layer, hidden layers, and an output layer. The input layer receives raw data, hidden layers process and extract features, and the output layer produces the final prediction or classification.

- Learning: ANNs are typically trained with backpropagation combined with an optimization method such as gradient descent. During training, the network is presented with labeled data (data with known outputs). The network’s predictions are compared to the actual labels, and the resulting error is propagated backward through the network to work out how much each weight and bias contributed to it. The weights and biases are then adjusted to reduce the error. Repeating this process over many examples allows the network to learn and improve its performance.

- Weights and Biases: These numerical values are the parameters the network adjusts during learning. Each connection between neurons has a weight, and each neuron has a bias:

  - Weights: These represent the strength of the signal transmitted from one neuron to another. A weight with a larger magnitude signifies a stronger influence. During training, weights are adjusted through backpropagation to improve the network’s performance.

  - Biases: These are constant values added to the weighted sum of inputs received by a neuron. They act like a threshold, influencing whether a neuron is activated or not. Biases are also adjusted during training to optimize the network’s output.
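The concepts above can be sketched with a single artificial neuron. This is a minimal illustration, not a production implementation: the sigmoid activation, the squared-error loss, the learning rate, and the toy OR dataset are all assumptions chosen for brevity, and the function names are made up for this example.

```python
import math

def sigmoid(z):
    """Activation function: squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus the bias, passed through the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def train_step(inputs, target, weights, bias, learning_rate=0.5):
    """One gradient-descent update on the squared error for a single example."""
    prediction = neuron_output(inputs, weights, bias)
    error = prediction - target
    # Chain rule: the sigmoid's derivative is prediction * (1 - prediction).
    delta = error * prediction * (1.0 - prediction)
    new_weights = [w - learning_rate * delta * x for w, x in zip(weights, inputs)]
    new_bias = bias - learning_rate * delta
    return new_weights, new_bias

# Toy labeled data (illustrative assumption): the logical OR of two inputs.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

weights, bias = [0.0, 0.0], 0.0
for _ in range(2000):
    for inputs, target in data:
        weights, bias = train_step(inputs, target, weights, bias)

# Round each output to the nearest class label.
predictions = [round(neuron_output(x, weights, bias)) for x, _ in data]
print(predictions)
```

With only one neuron there is nothing to propagate backward through, so this shows the weight and bias update in its simplest form. In a multi-layer network, backpropagation applies the same chain-rule idea layer by layer.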

Types of ANNs:

ANNs can be broadly categorized into two types based on the direction of information flow within the network:

Feedforward Networks:

- Information Flow: Information travels in one direction, from the input layer through hidden layers (if present) to the output layer. Imagine data flowing down a waterfall. Data enters the network, is processed layer by layer, and the final output is produced.

- Learning: Training happens through backpropagation as described earlier. The network learns from its mistakes and fine-tunes its responses based on the error signals propagated backward.

- Applications: Widely used for tasks like image recognition, speech recognition, and classification problems.
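The one-directional flow can be sketched as a loop over layers. The parameters below are hand-picked rather than trained, and the layer sizes, the ReLU activation, and the function names are assumptions made for illustration.

```python
def relu(z):
    """Activation that passes positive values through and zeroes out negatives."""
    return max(0.0, z)

def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def feedforward(inputs, layers):
    """Pass the data through each layer in order; nothing flows backward."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense_layer(activations, weights, biases, activation)
    return activations

# Hand-picked (untrained) parameters: 2 inputs -> 2 hidden neurons -> 1 output.
hidden = ([[1.0, -1.0], [0.5, 0.5]], [0.0, -0.25], relu)
output = ([[1.0, 2.0]], [0.0], lambda z: z)  # identity activation on the output

result = feedforward([1.0, 0.5], [hidden, output])
print(result)  # [1.5]
```

Both hidden neurons output 0.5 for this input, so the output neuron computes 1.0 * 0.5 + 2.0 * 0.5 = 1.5.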

Feedback Networks:

- Information Flow: Connections can loop back, so the output of a neuron at one step can feed into the network again at a later step. Think of a loop or a circular flow of information. This gives the network an internal state that carries information about earlier inputs forward, so its response to new data depends on what it has already seen.

- Learning: Feedback networks are trained with variants of backpropagation, such as backpropagation through time for recurrent networks. Like other ANNs, they can also be updated online, adjusting their weights and biases as new data arrives, which makes them suitable for dynamic environments.

- Applications: Used for tasks like robot control and time series forecasting. Recurrent neural networks (RNNs), the best-known family of feedback networks, are used for language translation and sentiment analysis.
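A minimal sketch of the feedback idea is a single recurrent unit whose previous output is fed back in alongside each new input. The tanh activation, the scalar weights, and the function names are illustrative assumptions, not a specific published architecture.

```python
import math

def recurrent_step(x, h_prev, w_in, w_rec, bias):
    """New hidden state from the current input and the previous hidden state."""
    return math.tanh(w_in * x + w_rec * h_prev + bias)

def run_sequence(sequence, w_in=0.5, w_rec=0.8, bias=0.0):
    """Feed a sequence one element at a time, carrying the state forward."""
    h = 0.0  # initial hidden state
    states = []
    for x in sequence:
        h = recurrent_step(x, h, w_in, w_rec, bias)
        states.append(h)
    return states

# The same input value (0.0) yields different states depending on what came before.
after_pulse = run_sequence([1.0, 0.0, 0.0])
no_pulse = run_sequence([0.0, 0.0, 0.0])
print(after_pulse)
print(no_pulse)
```

The last two inputs are identical in both runs, yet the states differ because the first run still carries a fading trace of the initial 1.0. A feedforward network has no such trace.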

Choosing the Right Network:

The choice between a feedforward or feedback network depends on the task at hand:

- For static tasks with well-defined inputs and outputs, feedforward networks are often sufficient.

- For dynamic tasks in which the input arrives as a sequence and earlier inputs affect later outputs, feedback networks are better suited.

Structure of ANNs

Artificial neural networks mimic the brain’s structure with interconnected processing units called artificial neurons. These artificial neurons are organized in layers: an input layer that receives raw data, hidden layers that process and extract features from the data, and an output layer that delivers the final results. Each neuron within a layer connects to neurons in the subsequent layer, and the strength of these connections, called weights, determines how much influence one neuron has on another. Information flows through the network, with each layer transforming the data until the output layer produces the desired prediction or classification. This layered structure allows ANNs to learn complex patterns and relationships within data.
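To make the layered structure concrete, the short sketch below counts the weights and biases in a fully connected network, assuming every neuron connects to every neuron in the next layer. The layer sizes and the function name are hypothetical.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    `layer_sizes` lists the number of neurons per layer, input layer first.
    """
    # One weight per connection between consecutive layers.
    weights = sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))
    # One bias per neuron, except in the input layer.
    biases = sum(layer_sizes[1:])
    return weights, biases

# Hypothetical sizes: 4 inputs, two hidden layers of 8 and 6 neurons, 3 outputs.
weights, biases = count_parameters([4, 8, 6, 3])
print(weights, biases)  # 98 17
```

The weight count is 4*8 + 8*6 + 6*3 = 98, which shows how quickly the number of learnable values grows as layers get wider.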


Similarities Between Human Brains and ANNs:

- Structure: Both brains and ANNs are composed of interconnected processing units (neurons in brains, artificial neurons in ANNs) that transmit signals to each other. These connections form networks that allow for complex information processing and learning.

- Learning: Both brains and ANNs learn through experience. One well-known model of how brains learn is Hebbian learning, in which connections between neurons are strengthened when the neurons are repeatedly activated together. ANNs learn through backpropagation, where the network adjusts its internal parameters to minimize errors in its predictions.

- Parallel Processing: Both brains and ANNs can process information in parallel across multiple units. This allows them to handle complex tasks efficiently.
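The difference between the two learning styles can be sketched with two simplified update rules for a single connection. Both are textbook-style simplifications with made-up function names: the Hebbian rule uses only the activity of the two connected neurons, while the error-driven rule (the delta rule for a linear unit, which backpropagation generalizes) needs a target to compare against.

```python
def hebbian_update(weight, pre, post, learning_rate=0.1):
    """Strengthen the connection in proportion to joint activity."""
    return weight + learning_rate * pre * post

def error_driven_update(weight, pre, prediction, target, learning_rate=0.1):
    """Move the weight against the prediction error (delta rule, linear unit)."""
    return weight - learning_rate * (prediction - target) * pre

w = 0.5
# Both neurons active: the Hebbian rule increases the weight.
print(hebbian_update(w, pre=1.0, post=1.0))
# Only one neuron active: the Hebbian rule leaves the weight unchanged.
print(hebbian_update(w, pre=1.0, post=0.0))
# Prediction above the target: the error-driven rule decreases the weight.
print(error_driven_update(w, pre=1.0, prediction=0.9, target=0.4))
```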

Circuit Board Brain: Analogy for an ANN

Advantages:

- Learning from Data: ANNs can learn complex patterns and relationships from data without the need for explicit programming. This makes them well-suited for tasks where traditional rule-based approaches are impractical.

- Adaptability: ANNs can adapt to new data and changing environments. As they are exposed to more data, they can continuously improve their performance.

- Fault Tolerance: Due to their distributed architecture, ANNs exhibit a degree of fault tolerance. Damage to a single neuron or connection may not significantly impact the network’s overall performance.

Disadvantages:

- Data Intensity: Training ANNs can require large amounts of labeled data. Acquiring and labeling data can be time-consuming and expensive.

- Black Box Problem: The complex internal workings of ANNs can make it difficult to understand how they arrive at their decisions. This lack of interpretability can be a challenge in applications where explainability is critical.

- Computational Cost: Training large and complex ANNs can be computationally expensive, requiring significant processing power and time.

Unique Points:

- Biomimicry: While not replicating the human brain exactly, ANNs draw inspiration from its structure and function. This biomimicry approach offers a powerful framework for machine learning.

- Applications Beyond Classification: ANNs are not limited to classification tasks. They can also be used for regression (predicting continuous values), dimensionality reduction (compressing data into a lower-dimensional space), and other applications.

- Transfer Learning: Pre-trained ANN models can be leveraged for new tasks by transferring the learned knowledge from a related domain. This can significantly reduce training time and improve performance on new tasks with limited data.
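One common form of transfer learning keeps the pre-trained layers frozen and trains only a new output layer on top of them. The sketch below illustrates that idea under loud assumptions: the "pre-trained" hidden weights are hand-picked stand-ins rather than the result of real training, the new task is a toy XOR dataset, and all names are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Stand-in for a pre-trained hidden layer. In practice these values would come
# from a model trained on a related task; here they are hand-picked (assumption).
FROZEN_WEIGHTS = [[4.0, 4.0], [-4.0, -4.0]]
FROZEN_BIASES = [-2.0, 6.0]

def extract_features(inputs):
    """Frozen layer: its parameters are never updated during training below."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(FROZEN_WEIGHTS, FROZEN_BIASES)
    ]

def predict(inputs, head_weights, head_bias):
    """New output neuron applied to the frozen features."""
    features = extract_features(inputs)
    return sigmoid(sum(w * f for w, f in zip(head_weights, features)) + head_bias)

def train_head(data, epochs=2000, learning_rate=0.5):
    """Train only the new output neuron; the frozen layer stays as it is."""
    head_weights, head_bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in data:
            features = extract_features(inputs)
            # For a sigmoid output with cross-entropy loss, the gradient with
            # respect to the weighted sum is (prediction - target).
            error = predict(inputs, head_weights, head_bias) - target
            head_weights = [w - learning_rate * error * f
                            for w, f in zip(head_weights, features)]
            head_bias -= learning_rate * error
    return head_weights, head_bias

# Toy "new task" (assumption): XOR, which a single neuron on raw inputs cannot solve.
new_task = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
head_weights, head_bias = train_head(new_task)
head_predictions = [round(predict(x, head_weights, head_bias)) for x, _ in new_task]
print(head_predictions)
```

Only three numbers are learned here (two head weights and one head bias), which hints at why reusing existing features can cut training time when data for the new task is limited.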

Future Work:

Research on ANNs is ongoing, focusing on addressing their limitations and expanding their capabilities. Areas of active exploration include:

- Explainable AI (XAI): Developing techniques to make ANNs more interpretable and understandable.

- Neuromorphic Computing: Designing hardware specifically for running ANNs, aiming for improved efficiency and lower power consumption.

- Lifelong Learning: Enabling ANNs to continuously learn and adapt throughout their operational lifetime.

Conclusion:

Artificial neural networks are a versatile and powerful tool for machine learning. Their ability to learn from data, adapt to new information, and handle complex problems positions them at the forefront of artificial intelligence research. As researchers continue to address challenges like data requirements, interpretability, and computational efficiency, ANNs hold immense potential to revolutionize various fields and shape the future of intelligent systems.