TensorFlow vs. PyTorch

February 5, 2025Philosophy and Ecosystem

TensorFlow is built for both research and production, developed by Google. It offers tools like TensorFlow Hub (pre-trained models), TensorFlow Lite (mobile), and TFX (production pipelines). Initially graph-based, TensorFlow 2.0 introduced eager execution, making it more user-friendly.

PyTorch, developed by Facebook, emphasizes flexibility and dynamic graph execution. It’s preferred for experimentation and research. Though initially focused on researchers, it now offers TorchServe for production and PyTorch Lightning for scaling models.

Key Difference: TensorFlow focuses on production scalability, while PyTorch emphasizes rapid prototyping and flexibility.

Coding Style: Basic Example

TensorFlow

python

Copy code

import tensorflow as tf

from tensorflow.keras import layers, models

(x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()

x_train, x_test = x_train / 255.0, x_test / 255.0

model = models.Sequential([

layers.Flatten(input_shape=(28, 28)),

layers.Dense(128, activation=’relu’),

layers.Dropout(0.2),

layers.Dense(10, activation=’softmax’)

])

model.compile(optimizer=’adam’, loss=’sparse_categorical_crossentropy’, metrics=[‘accuracy’])

model.fit(x_train, y_train, epochs=5)

test_loss, test_acc = model.evaluate(x_test, y_test)

print(f’Test Accuracy: {test_acc}’)

PyTorch

python

Copy code

import torch

import torch.nn as nn

import torch.optim as optim

from torchvision import datasets, transforms

train_loader = torch.utils.data.DataLoader(datasets.MNIST(‘.’, download=True, transform=transforms.ToTensor()), batch_size=64, shuffle=True)

test_loader = torch.utils.data.DataLoader(datasets.MNIST(‘.’, download=True, transform=transforms.ToTensor()), batch_size=64, shuffle=False)

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.fc1 = nn.Linear(28*28, 128)

self.fc2 = nn.Linear(128, 10)

def forward(self, x):

x = x.view(-1, 28*28)

x = torch.relu(self.fc1(x))

x = self.fc2(x)

return torch.log_softmax(x, dim=1)

model = Net()

optimizer = optim.Adam(model.parameters(), lr=0.001)

for epoch in range(5):

for data, target in train_loader:

optimizer.zero_grad()

output = model(data)

loss = nn.CrossEntropyLoss()(output, target)

loss.backward()

optimizer.step()

correct, total = 0, 0

with torch.no_grad():

for data, target in test_loader:

output = model(data)

correct += (output.argmax(dim=1) == target).sum().item()

print(f’Test Accuracy: {correct / len(test_loader.dataset)}’)

Code Comparison:

- TensorFlow: Uses a higher-level API with methods like model.fit() and model.evaluate() for training and evaluation.

- PyTorch: Requires manual control of training loops and optimization, providing flexibility for experimentation

Deployment and Production

TensorFlow excels in deployment with tools like TensorFlow Lite (for mobile), TensorFlow.js (for web), and TFX (for production pipelines). These make TensorFlow ideal for large-scale production environments.

PyTorch, while initially research-focused, now offers TorchServe for deployment and PyTorch Mobile for mobile applications, making it competitive in production, though TensorFlow has a more mature ecosystem

Community and Documentation

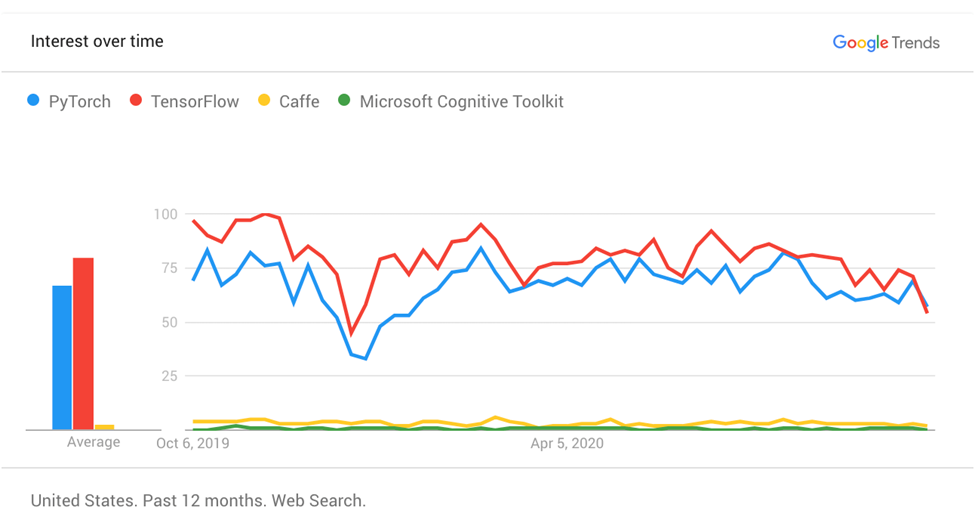

TensorFlow has a larger community and extensive resources due to its earlier start. Its ecosystem includes more tutorials and production-focused tools.

PyTorch is growing rapidly, especially in academic circles. Its dynamic nature and ease of use attract many researchers. Both frameworks have excellent documentation.

Performance

- TensorFlow typically outperforms in large-scale distributed systems, especially with multi-GPU setups.

- PyTorch is favored in research environments and smaller-scale tasks due to its dynamic graph and easier debugging.

Which to Choose?

- Choose TensorFlow if you need production-ready deployment tools and scalability.

- Choose PyTorch if you’re focused on research, rapid prototyping, or prefer a more intuitive, Pythonic approach.

Conclusion

Both TensorFlow and PyTorch are powerful deep learning frameworks. Your choice depends on whether you prioritize deployment or flexibility for experimentation. TensorFlow excels in production, while PyTorch remains the preferred framework for research and innovation.